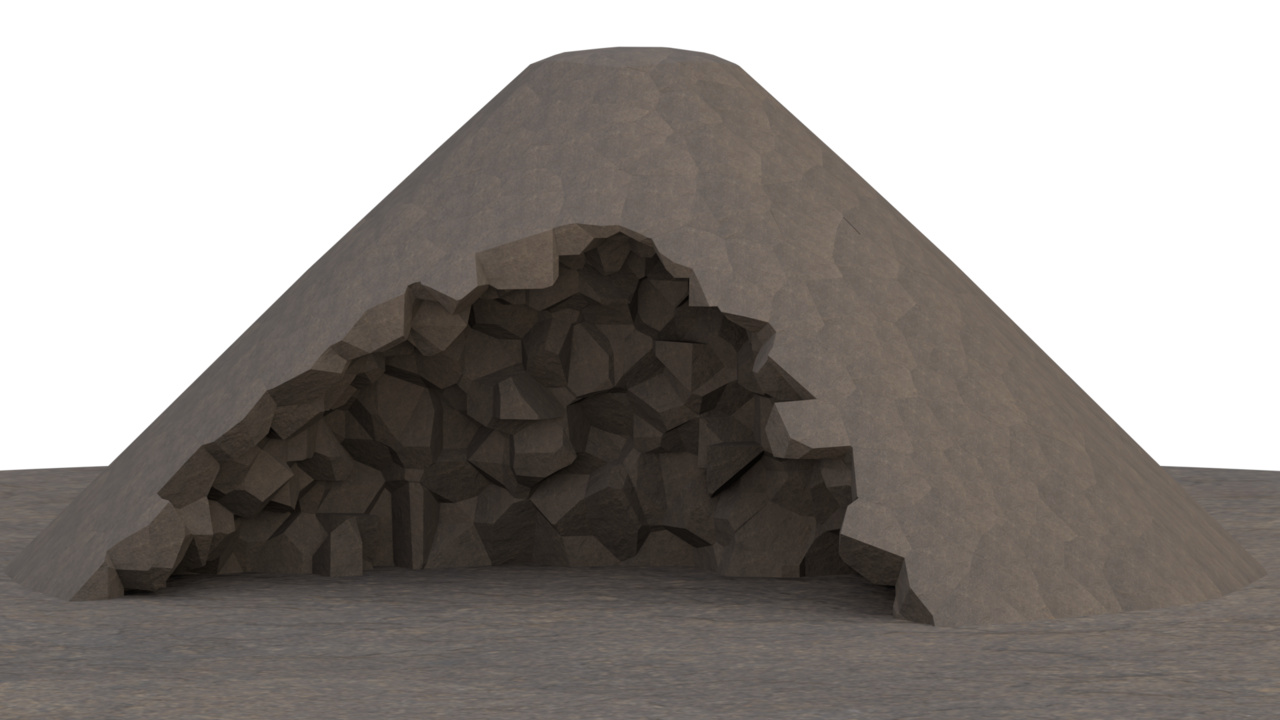

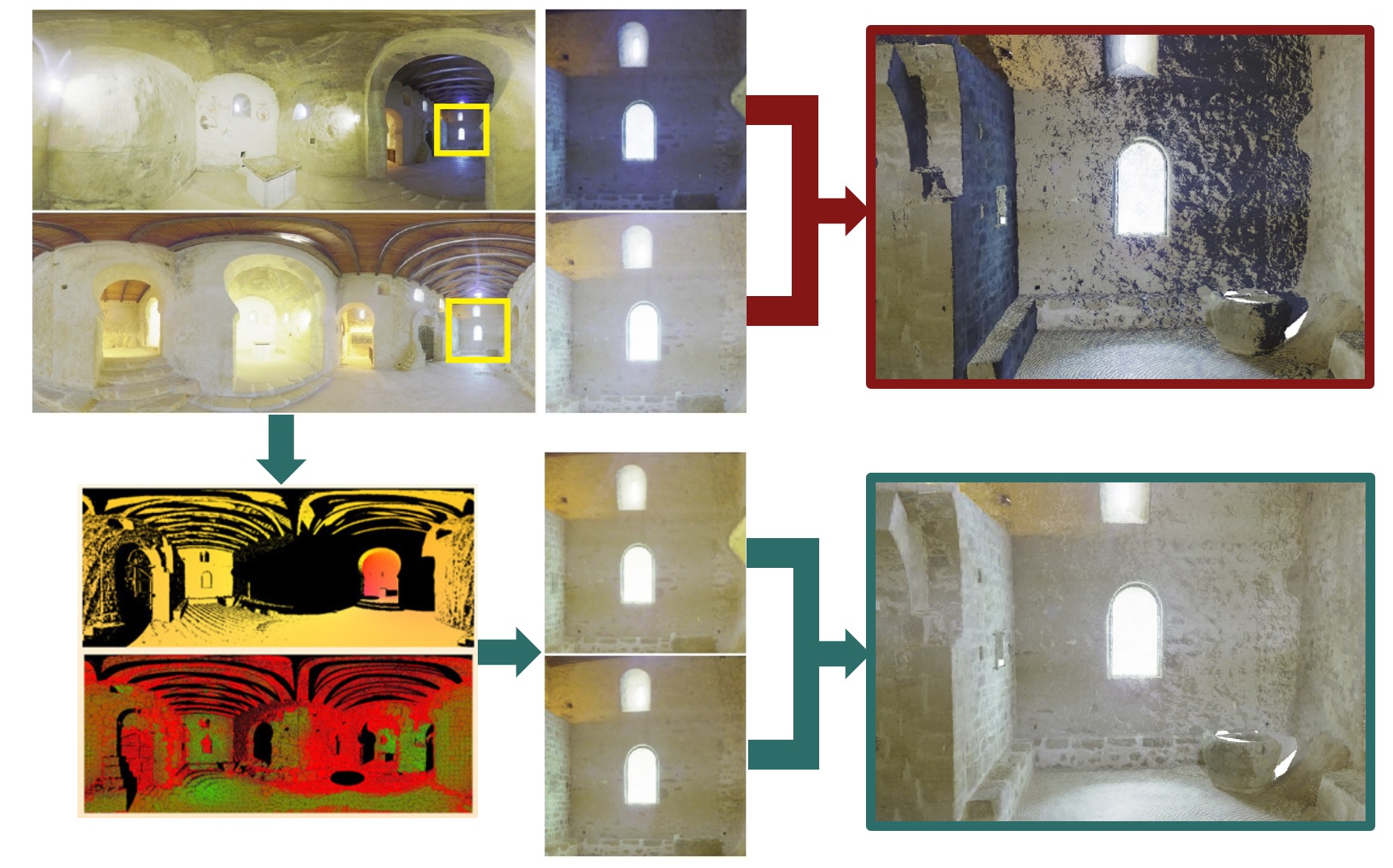

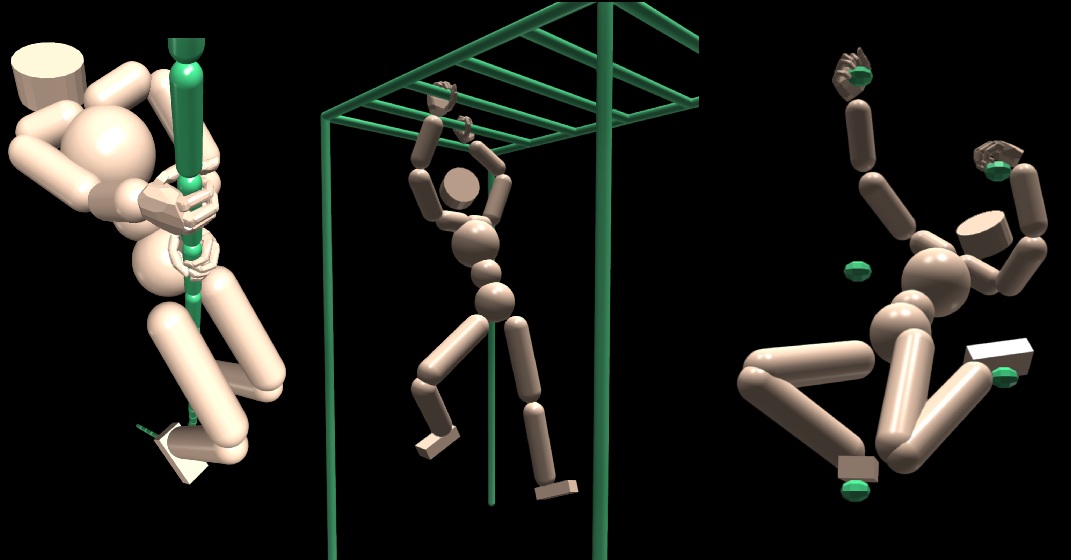

Performing everyday tasks requires both large-scale body movements driven by the arms, legs, and torso, and fine motor skills, particularly in the hands. However, existing reinforcement learning approaches often struggle to efficiently acquire these diverse motion skills from scratch, leading to slow convergence or suboptimal policies. We observe that the motor skills of distinct body parts exhibit a certain level of independence. This suggests a potential advantage in independently pretraining specific dexterous limbs prior to their integration in full-body motion tasks. Inspired by this, we present Part-wise Heterogeneous Agents (PHA), a cooperative multi-agent reinforcement learning approach where body parts are treated as independent agents, allowing for specialized skill acquisition and cooperative execution of complex full-body tasks. Furthermore, our method enables the pretraining of fine motor skills, such as gripping a bar or grabbing a climbing hold, before integrating them with other body parts for complex whole-body coordination, thus introducing part-wise Reusable Policy Priors. We tested our technique on challenging tasks such as rope climbing, rock bouldering, and traversing a horizontal ladder. Our approach not only accelerates convergence but also improves overall policy quality, achieving motion tasks that single-agent approaches struggle to solve. Our results also demonstrate adaptability: Reusable Policy Priors can adjust their policies to successfully perform complex tasks in scenarios not seen during training.
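The part-wise idea described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the joint layout (`PART_DOFS`), the `PartAgent` class, the toy policy, and the choice to give every agent the full observation are all hypothetical assumptions made for illustration. It only shows how separate per-part policies, some of them warm-started from a shared pretrained prior, might be combined into one full-body action.

```python
import math
import random
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence

# Hypothetical layout: which action indices each body-part agent controls.
PART_DOFS: Dict[str, List[int]] = {
    "torso_legs": [0, 1, 2, 3],
    "left_hand": [4, 5],
    "right_hand": [6, 7],
}
NUM_DOFS = 8

Policy = Callable[[Sequence[float]], List[float]]


@dataclass
class PartAgent:
    """One body part treated as an agent (illustrative, not the paper's class)."""
    name: str
    dofs: List[int]
    policy: Policy
    pretrained: bool = False  # True if warm-started from a reusable prior


def make_toy_policy(n_out: int, seed: int) -> Policy:
    """Stand-in for a learned policy: a fixed random map squashed to (-1, 1)."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1.0, 1.0) for _ in range(n_out)]

    def policy(obs: Sequence[float]) -> List[float]:
        s = sum(obs)
        return [math.tanh(w * s) for w in weights]

    return policy


def build_agents(hand_prior: Policy) -> List[PartAgent]:
    """Create one agent per part; both hands start from the same prior."""
    return [
        PartAgent("torso_legs", PART_DOFS["torso_legs"], make_toy_policy(4, seed=1)),
        PartAgent("left_hand", PART_DOFS["left_hand"], hand_prior, pretrained=True),
        PartAgent("right_hand", PART_DOFS["right_hand"], hand_prior, pretrained=True),
    ]


def compose_action(agents: List[PartAgent], obs: Sequence[float]) -> List[float]:
    """Write each agent's output into its own slots of the full-body action."""
    action = [0.0] * NUM_DOFS
    for agent in agents:
        for idx, value in zip(agent.dofs, agent.policy(obs)):
            action[idx] = value
    return action
```

In this sketch a hand prior would first be trained on an isolated task such as gripping, then passed to `build_agents` so that both hand agents begin from it while the remaining agent starts from scratch. How observations are split among agents and how the priors are fine-tuned during cooperative training are left out here.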

@article{Carranza2025pha,
  title = {PHA: Part-wise Heterogeneous Agents with Reusable Policy Priors for Physics-Based Motion Synthesis},
  author = {Carranza, Luis and Argudo, Oscar and Andujar, Carlos},
  journal = {Proceedings of the ACM on Computer Graphics and Interactive Techniques (SCA 2025)},
  year = {2025},
  volume = {8},
  number = {4},
  pages = {22},
  doi = {10.1145/3747870},
}