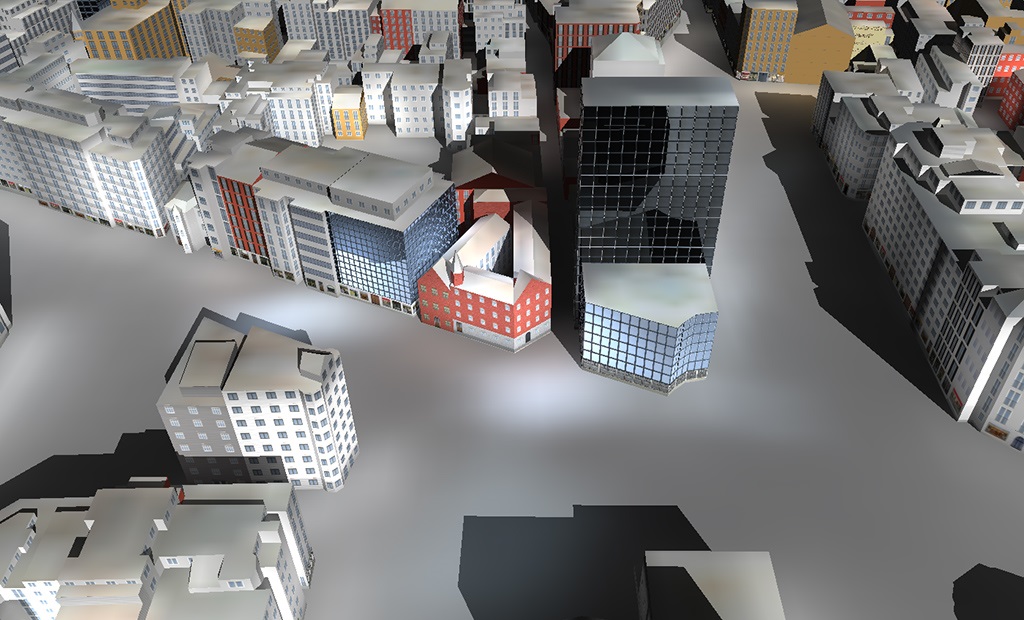

Realistic reconstruction and rendering of detailed 3D scenarios from multiple data sources

O. Argudo (Advisors: C. Andujar, A. Chica)

PhD thesis, Universitat Politècnica de Catalunya, 2018

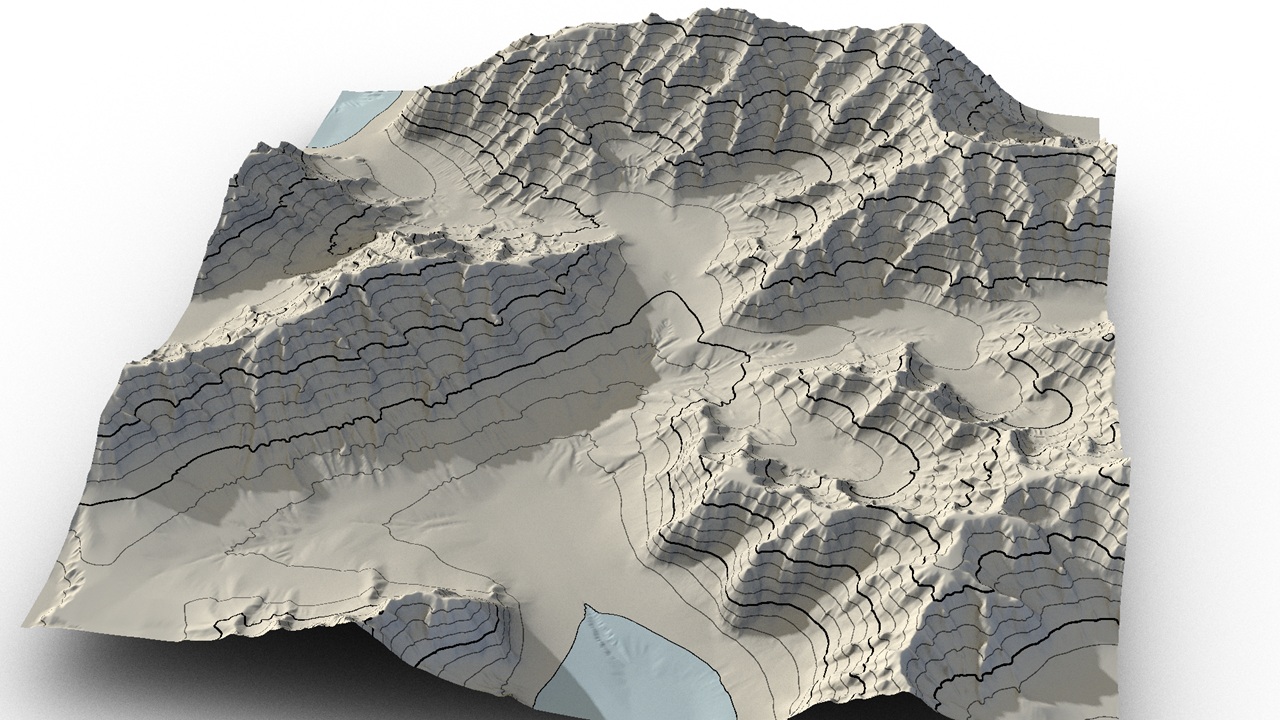

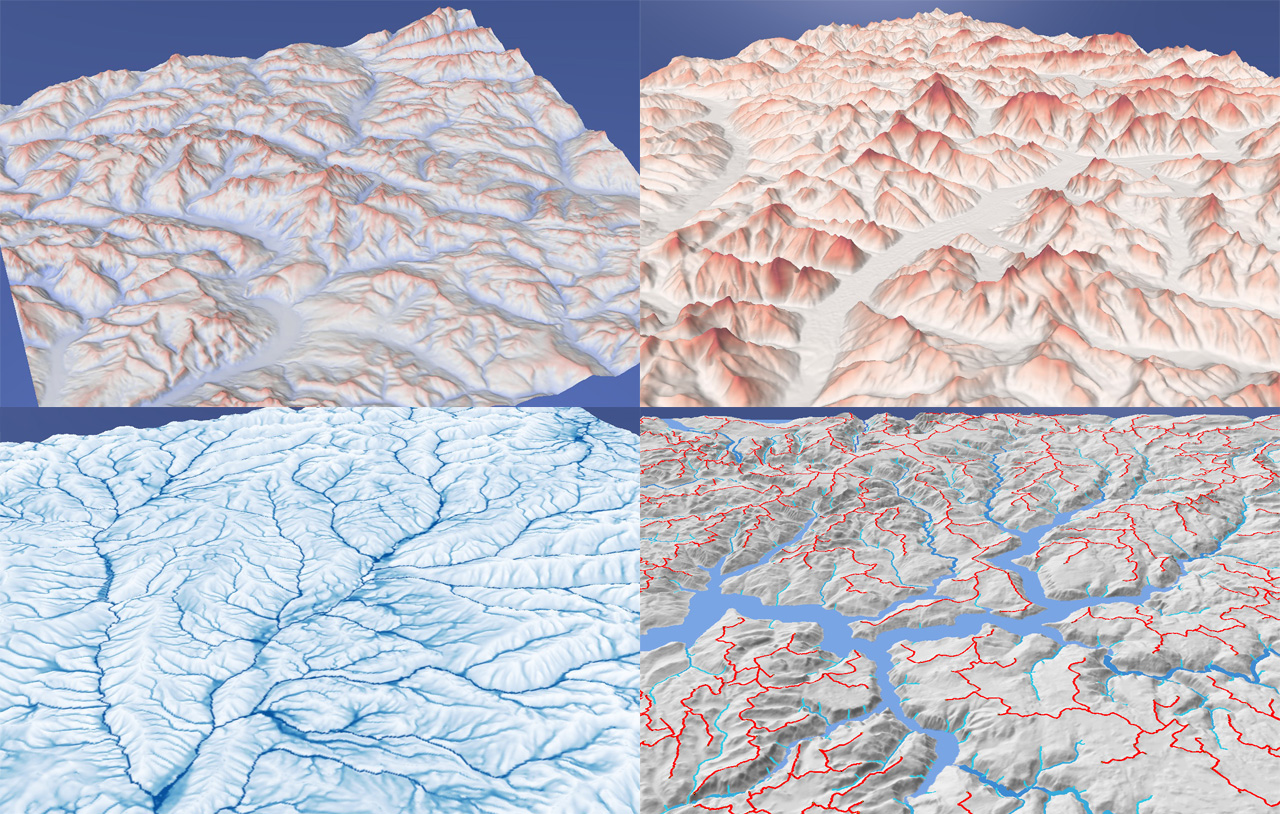

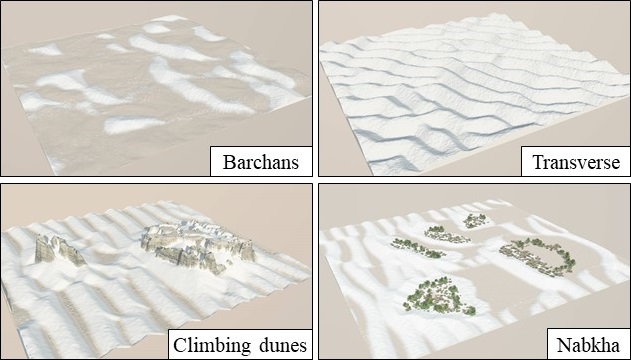

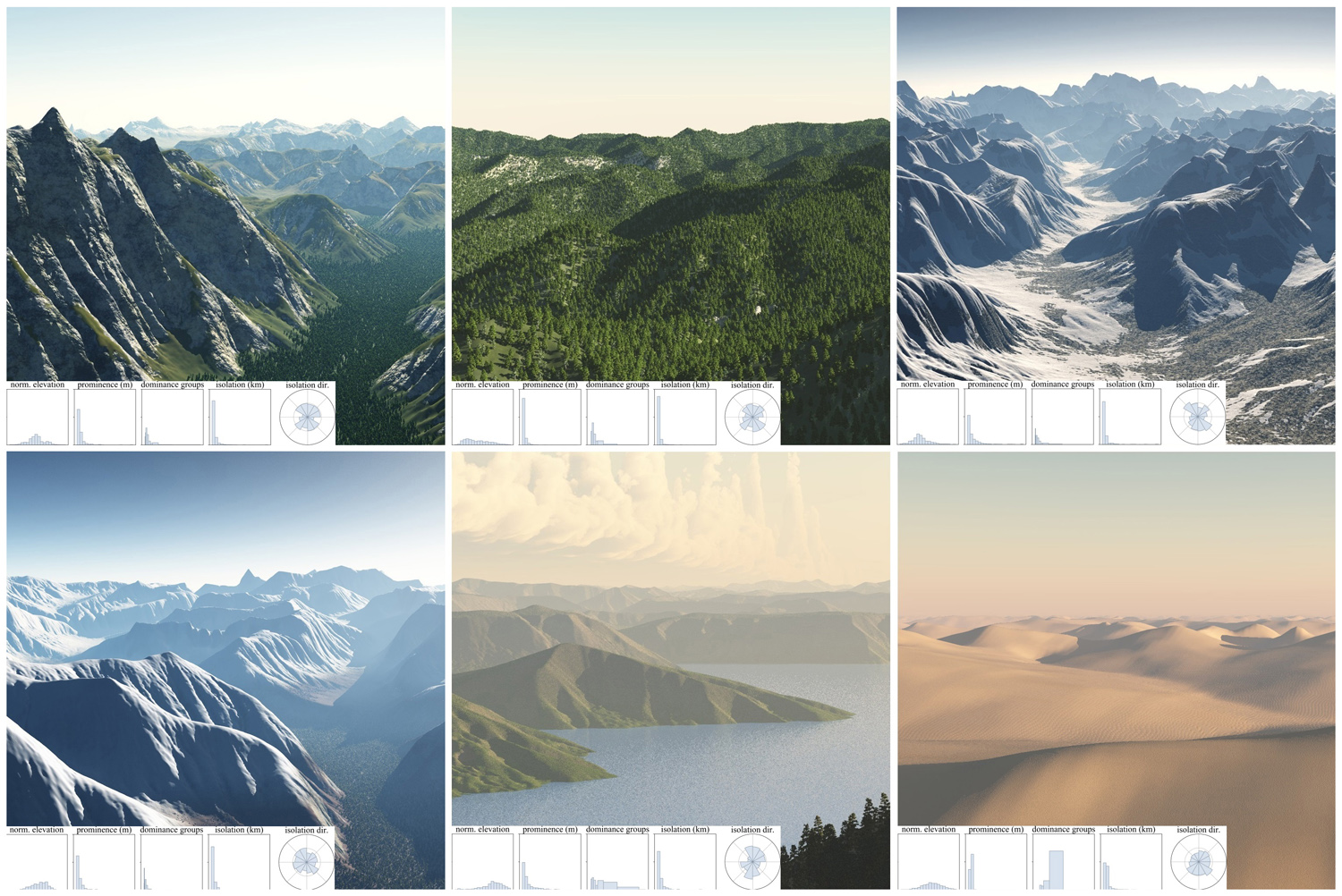

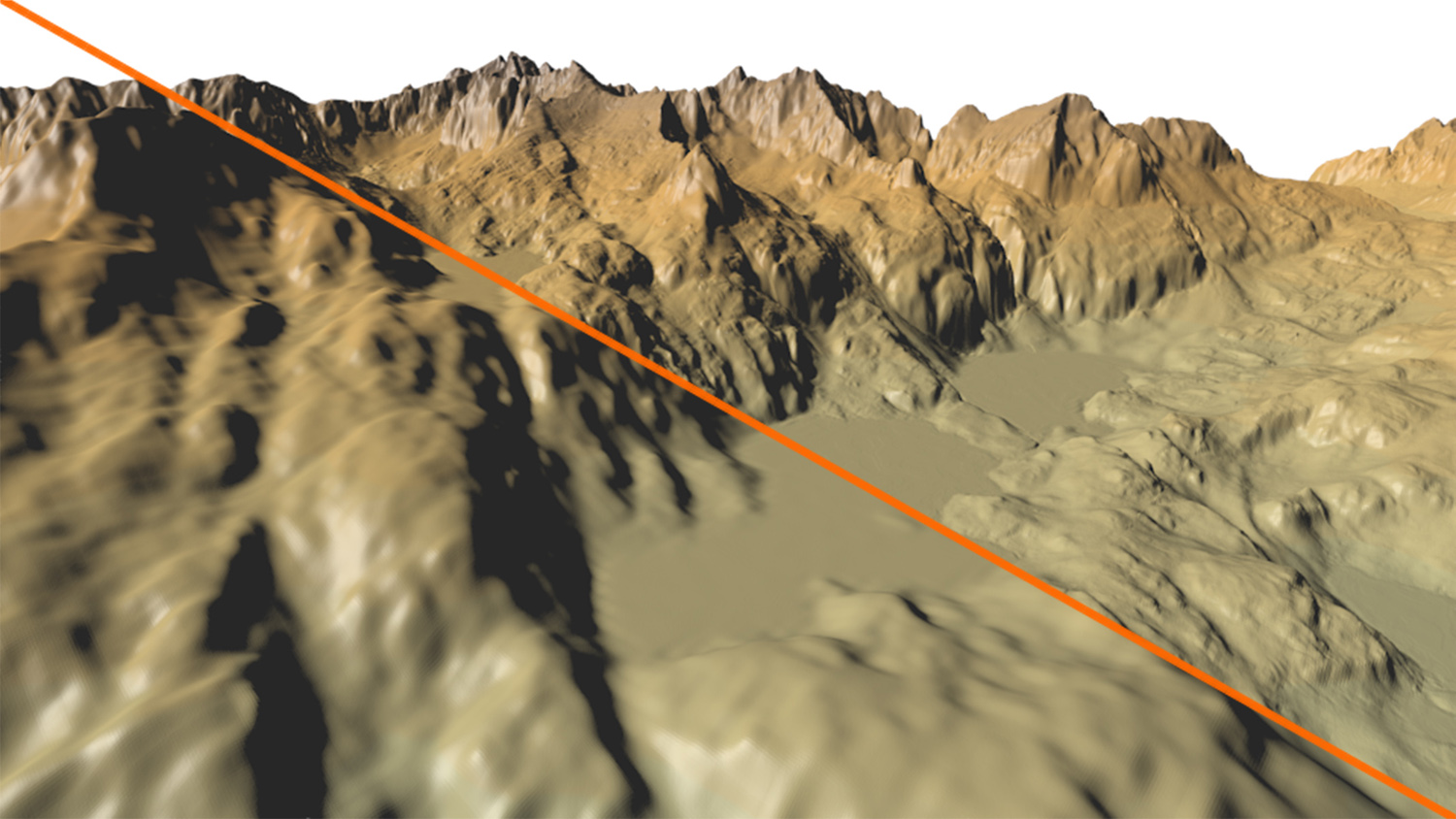

In recent years, we have witnessed significant improvements in digital terrain modeling, mainly through photogrammetric techniques based on satellite and aerial photography, as well as laser scanning. These techniques allow the creation of Digital Elevation Models (DEM) and Digital Surface Models (DSM) that can be streamed over the network and explored through virtual globe applications like Google Earth or NASA WorldWind. The resolution of these 3D scenes has improved noticeably, reaching 1 m or finer for DEM and buildings in some urban areas, and finer than 10 cm per pixel in the associated aerial imagery. However, in rural, forest or mountainous areas, the typical resolution of elevation datasets ranges between 5 and 30 m, and that of the corresponding aerial photographs between 25 cm and 1 m. This level of detail is sufficient only for aerial points of view; as the viewpoint approaches the surface, the terrain loses its realistic appearance.

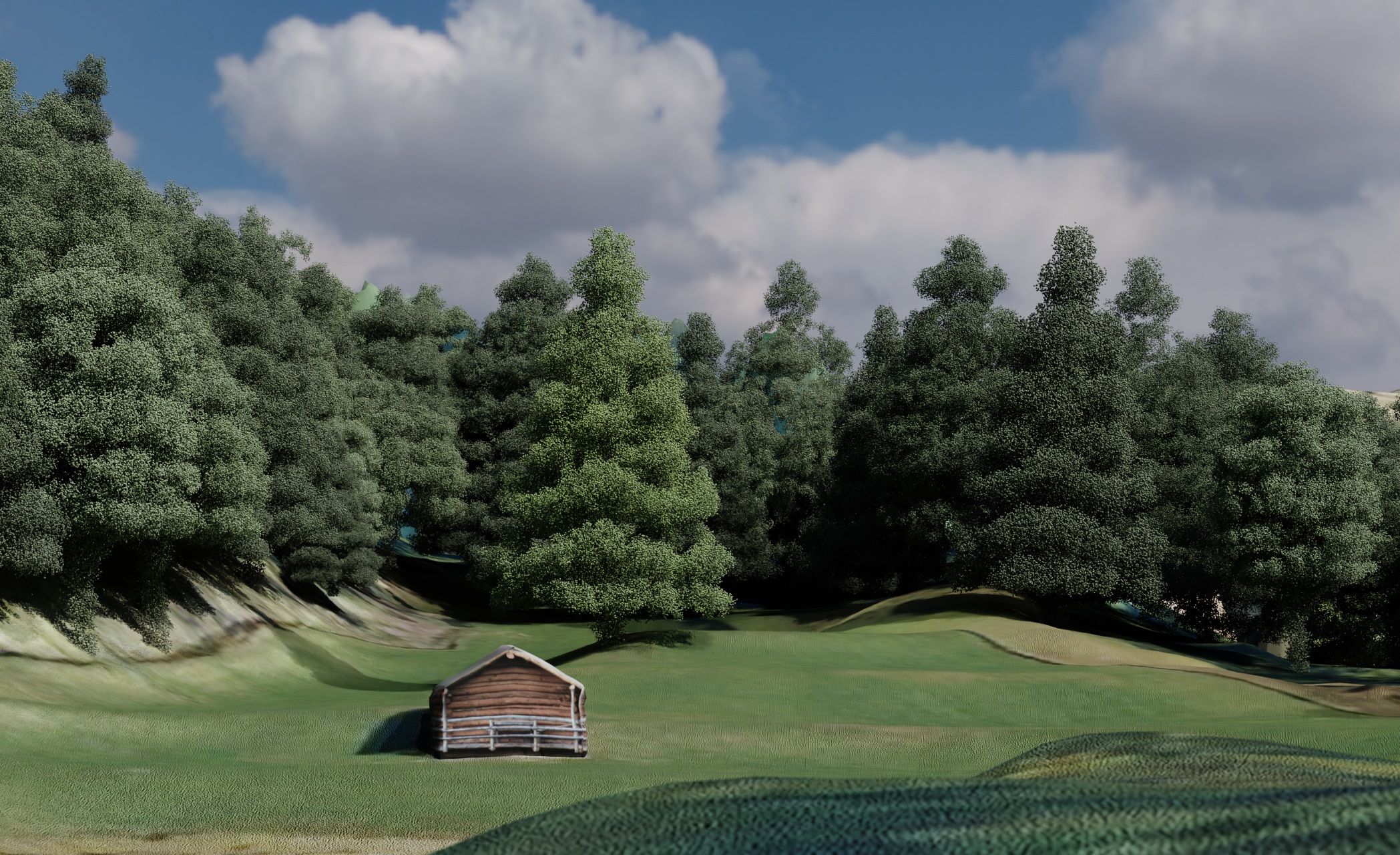

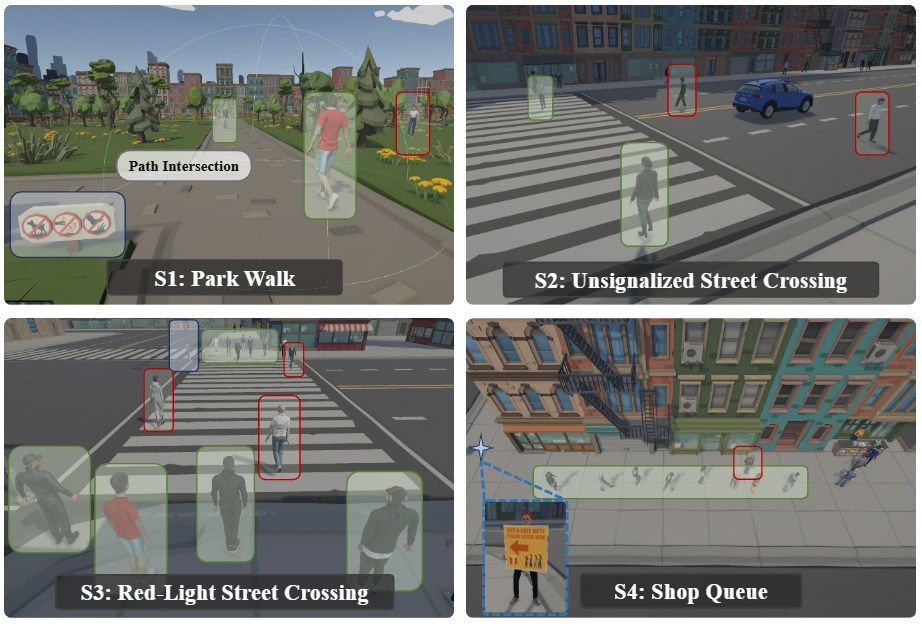

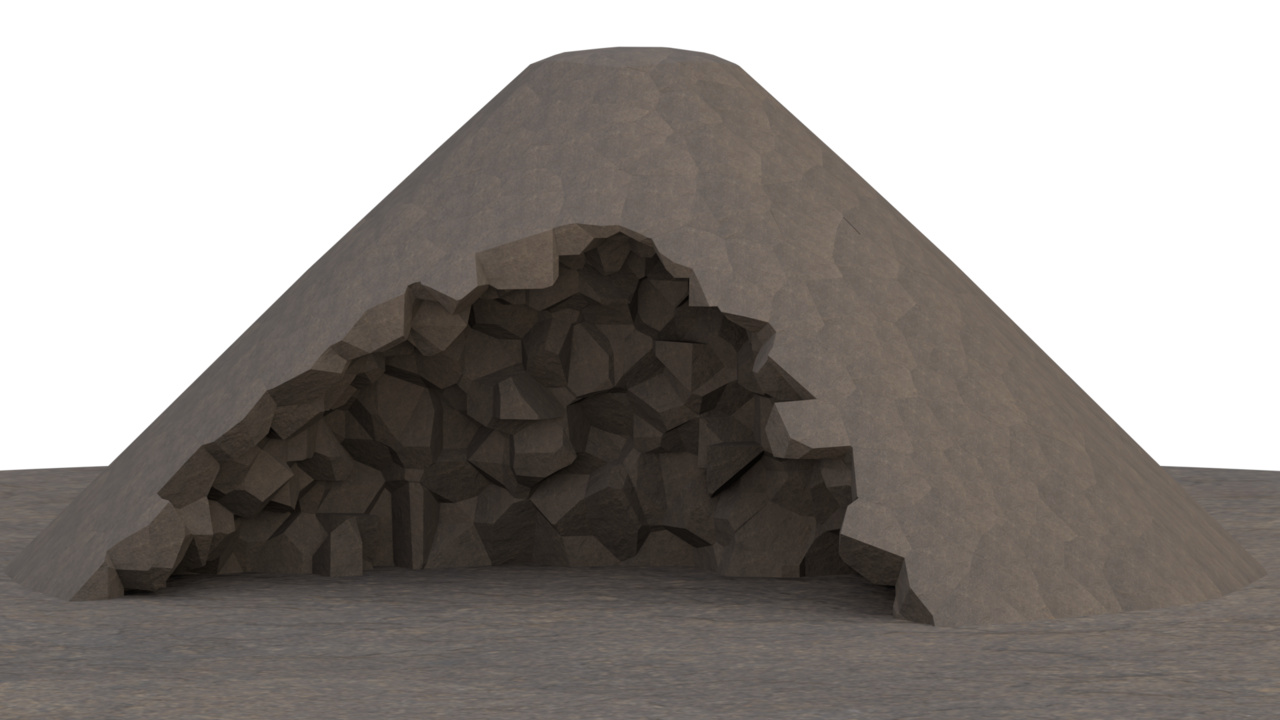

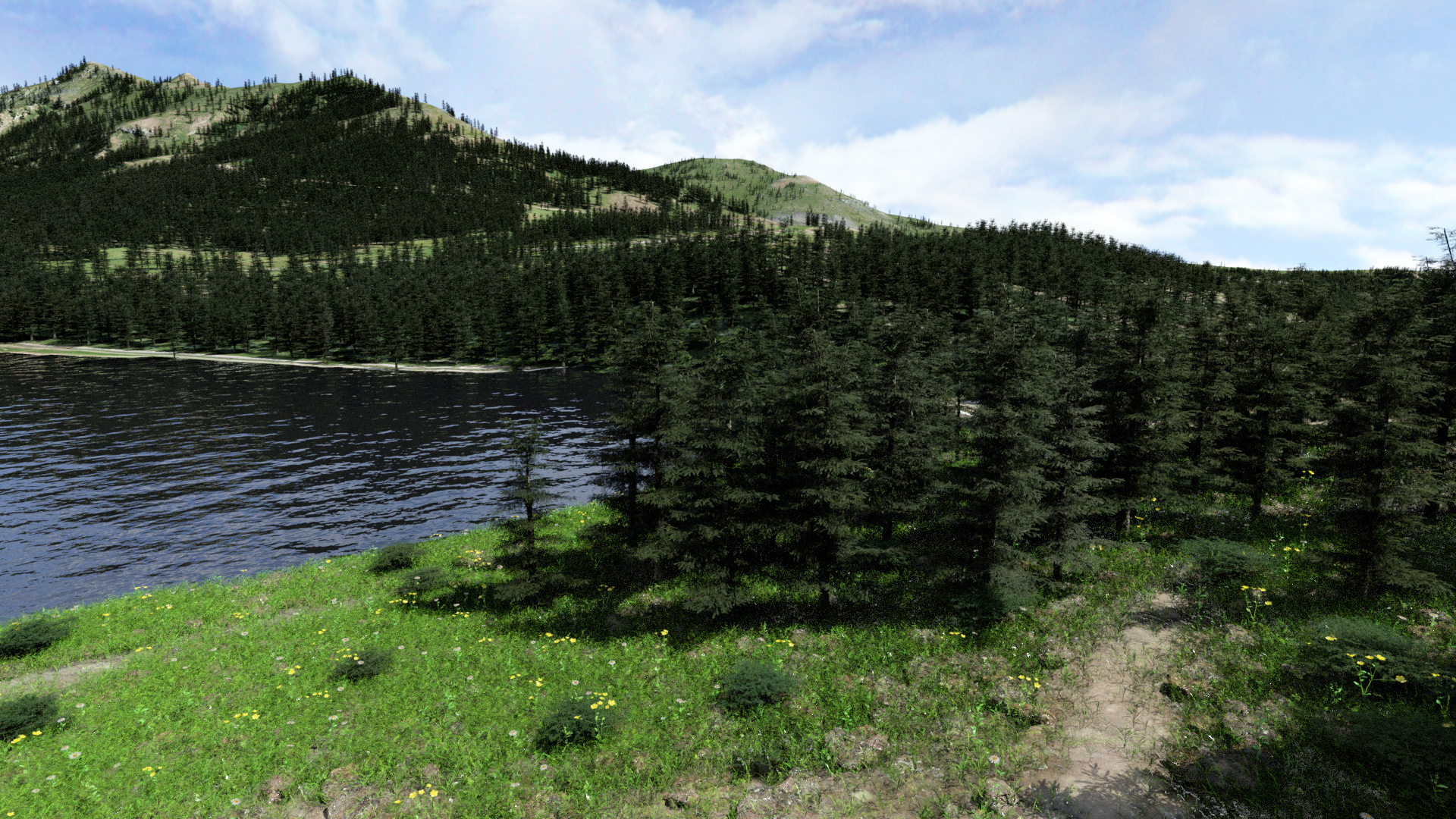

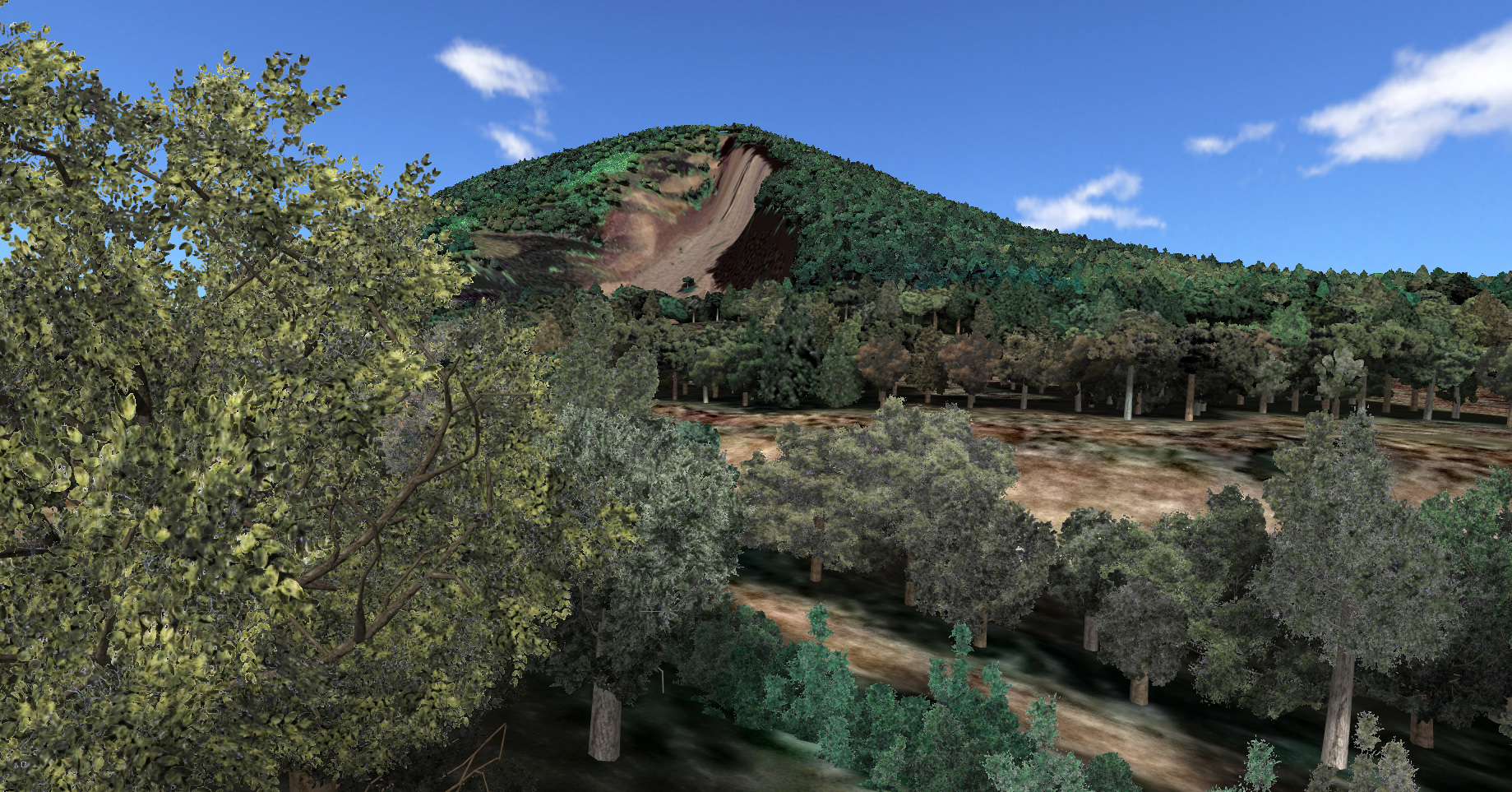

One approach to augmenting the detail of currently available datasets is to add synthetic details in a plausible manner, i.e. elements that match the features perceived in the aerial view. By combining the real dataset with the instancing of models on the terrain and other procedural detail techniques, the effective resolution can potentially become arbitrary. Several applications do not need an exact reproduction of the real elements but would greatly benefit from plausibly enhanced terrain models: videogames and entertainment applications, visual impact assessment (e.g. how a new ski resort would look), virtual tourism, simulations, etc.
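As a rough illustration of layering procedural detail on top of a real dataset, the sketch below bilinearly upsamples a coarse elevation grid and adds a few octaves of random value noise. It is a generic textbook-style technique and not the method developed in the thesis. The function names (`resample_bilinear`, `enhance_dem`), the parameters and the sample elevations are all hypothetical, chosen only for this example, and elevations are assumed to be in metres.

```python
import numpy as np

def resample_bilinear(grid, out_shape):
    """Bilinearly resample a 2D grid (at least 2x2) to out_shape."""
    rows, cols = grid.shape
    # Output sample positions expressed in input-grid coordinates.
    r = np.linspace(0, rows - 1, out_shape[0])
    c = np.linspace(0, cols - 1, out_shape[1])
    # Index of the upper-left neighbour, clamped so r0 + 1 and c0 + 1 stay valid.
    r0 = np.floor(r).astype(int).clip(0, rows - 2)
    c0 = np.floor(c).astype(int).clip(0, cols - 2)
    fr = (r - r0)[:, None]  # fractional row offset, as a column vector
    fc = (c - c0)[None, :]  # fractional column offset, as a row vector
    top = grid[np.ix_(r0, c0)] * (1 - fc) + grid[np.ix_(r0, c0 + 1)] * fc
    bottom = grid[np.ix_(r0 + 1, c0)] * (1 - fc) + grid[np.ix_(r0 + 1, c0 + 1)] * fc
    return top * (1 - fr) + bottom * fr

def enhance_dem(dem, factor, octaves=4, amplitude=1.0, seed=0):
    """Upsample a coarse DEM and add multi-octave value noise (hypothetical helper)."""
    rows, cols = dem.shape
    # Keep the original samples at every `factor`-th position of the output.
    out_shape = ((rows - 1) * factor + 1, (cols - 1) * factor + 1)
    base = resample_bilinear(dem, out_shape)
    rng = np.random.default_rng(seed)
    detail = np.zeros(out_shape)
    for o in range(octaves):
        # Each octave doubles the lattice frequency and halves the amplitude.
        n = 2 ** (o + 1) + 1
        lattice = rng.uniform(-1.0, 1.0, size=(n, n))
        detail += amplitude * 0.5 ** o * resample_bilinear(lattice, out_shape)
    return base + detail

# Made-up 3x3 elevation grid, refined to 4 times finer spacing.
coarse = np.array([[100.0, 110.0, 120.0],
                   [105.0, 115.0, 125.0],
                   [110.0, 120.0, 130.0]])
fine = enhance_dem(coarse, factor=4, octaves=3, amplitude=0.5, seed=1)
```

Noise of this kind is unaware of what the aerial image shows, so it only raises the nominal resolution. Making the added detail plausible, in the sense of matching the features perceived in the aerial view, requires guidance from the real data.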

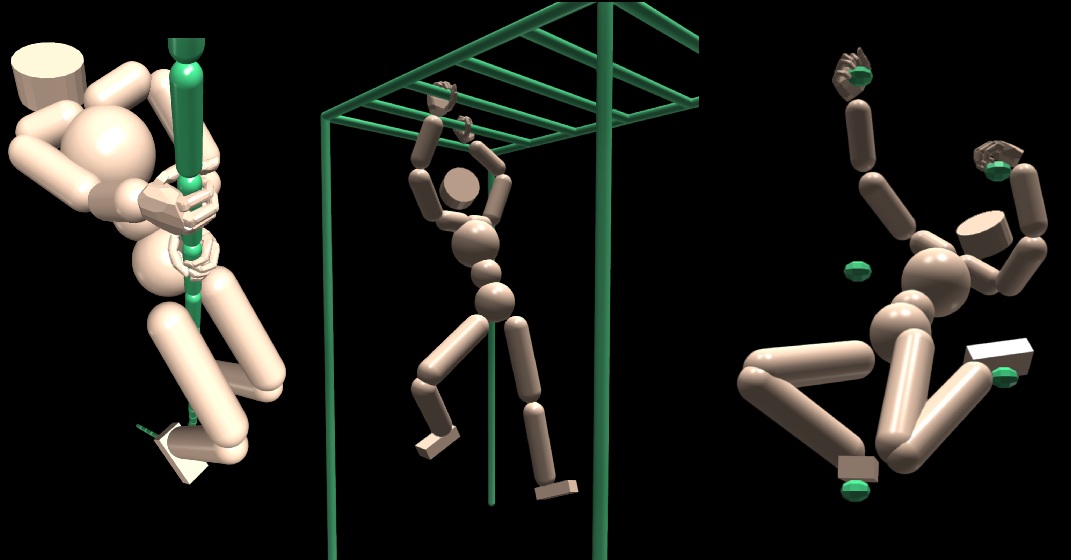

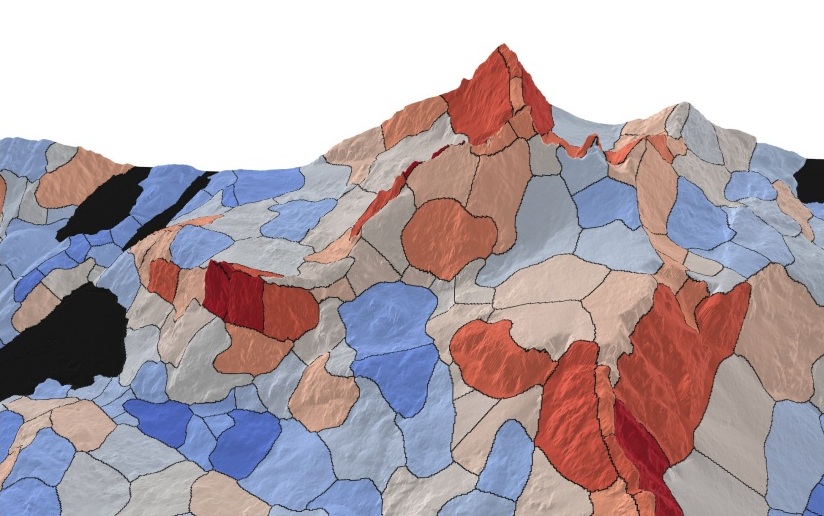

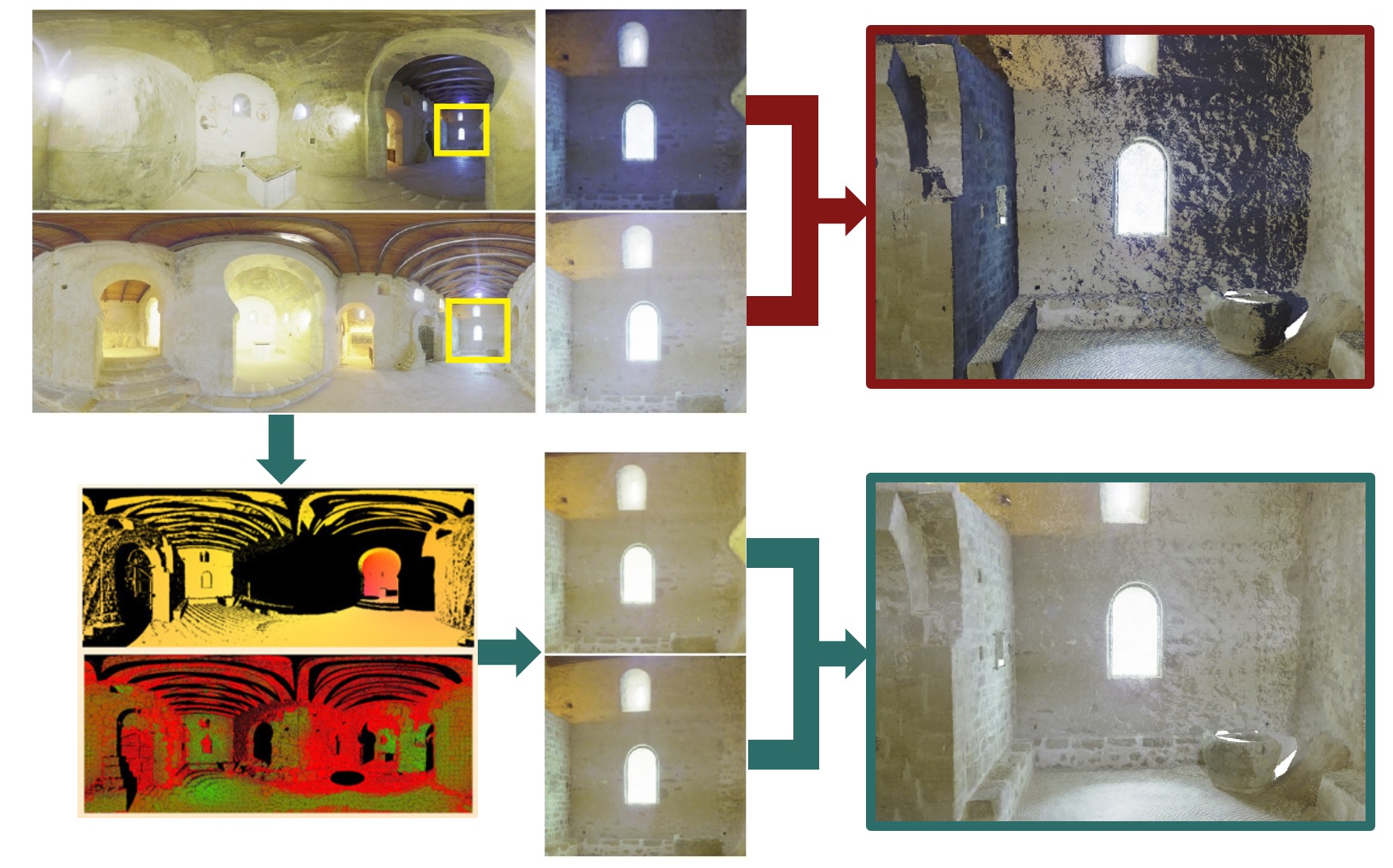

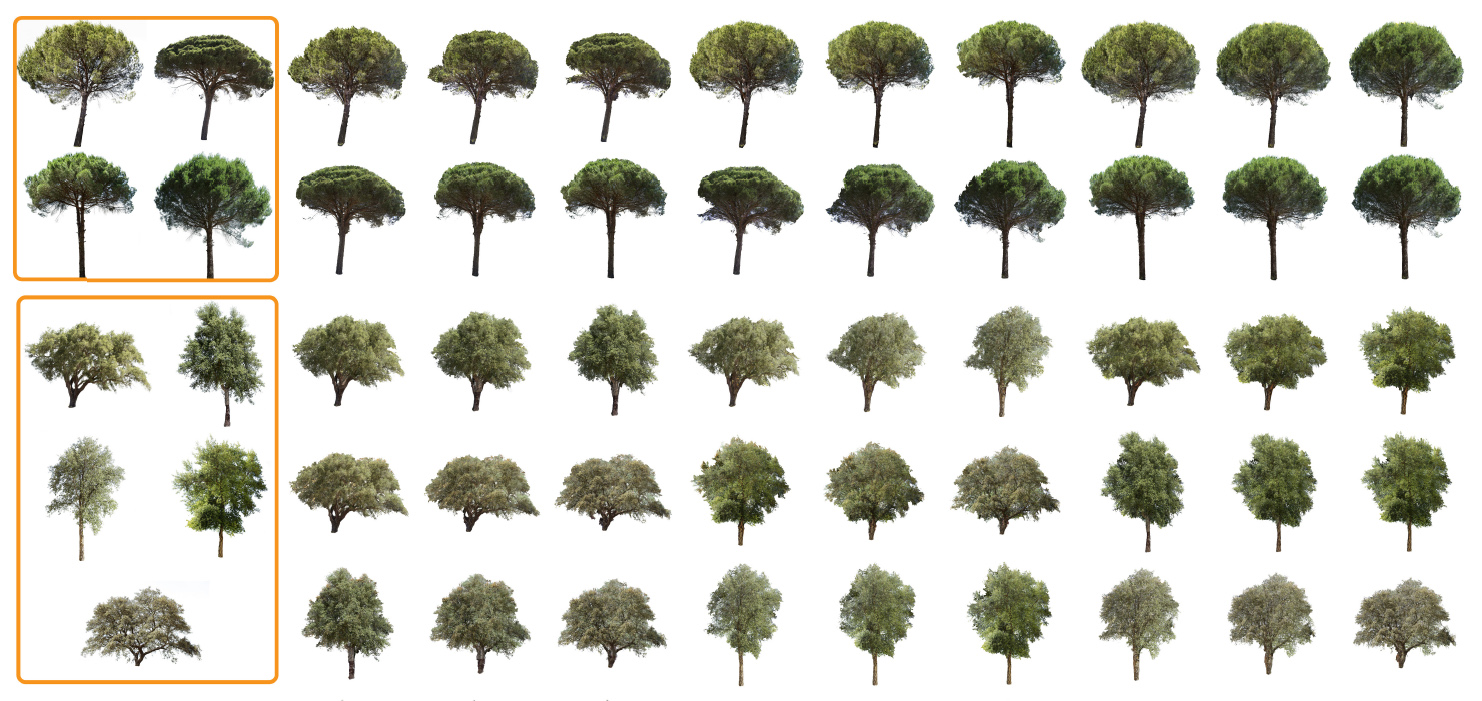

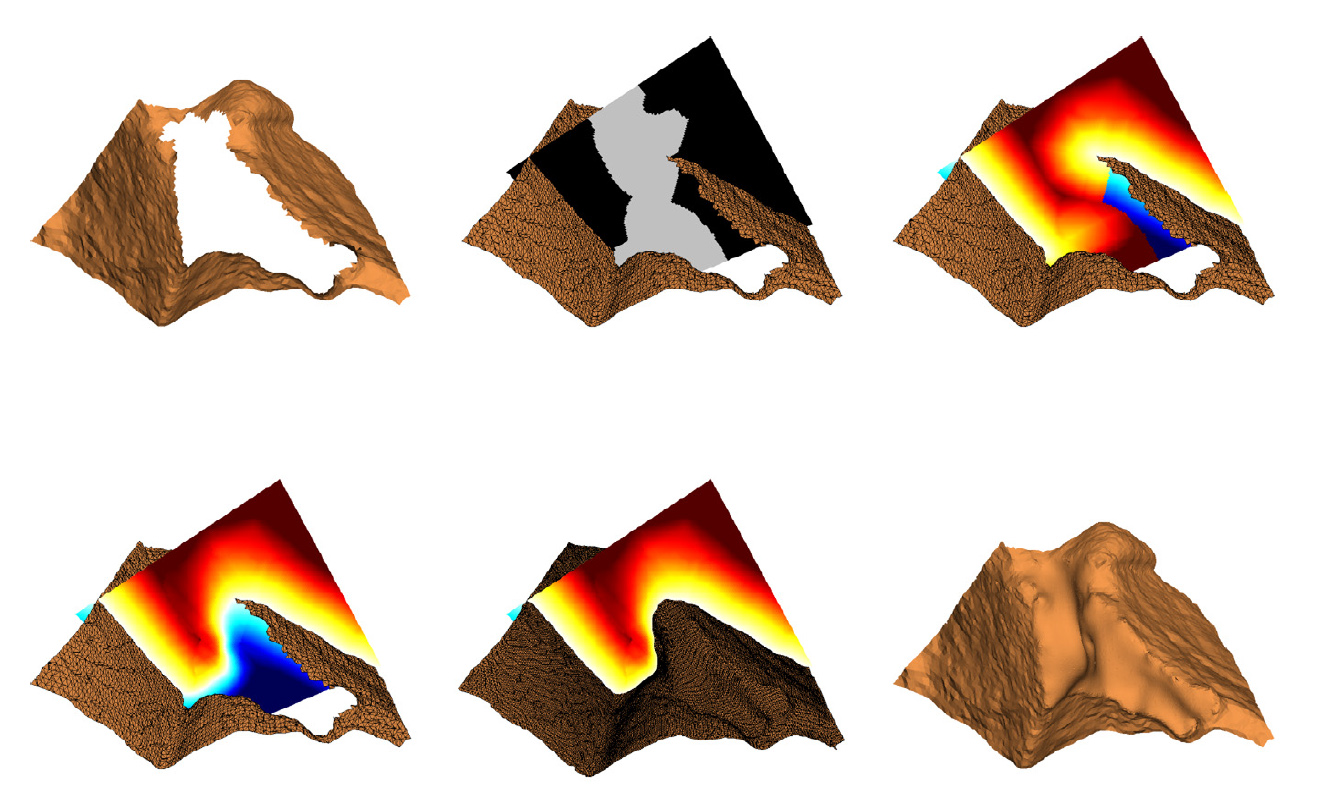

In this thesis we propose new methods and tools to aid the reconstruction and synthesis of high-resolution terrain scenes from currently available data sources, in order to achieve realistic-looking ground-level views. In particular, we focus on rural scenarios, mountains and forest areas. Our main goal is to combine plausible synthetic elements and procedural detail with publicly available real data to create detailed 3D scenes of existing locations. Our research has focused on the following contributions:

- An efficient pipeline for aerial imagery segmentation
- Plausible terrain enhancement from high-resolution examples
- Super-resolution of DEM by transferring details from the aerial photograph
- Synthesis of arbitrary tree picture variations from a reduced set of photographs
- Reconstruction of 3D tree models from a single image
- A compact and efficient tree representation for real-time rendering of forest landscapes